Reinforcement Learning-Based Switching Controller

01 March 2023

Description

This project uses reinforcement learning (RL) to control a milliscale robot navigating through constrained spaces, specifically a simplified model of the large intestine. The robot is a ferromagnetic particle moved by magnetic fields generated by a UR5 robotic arm.

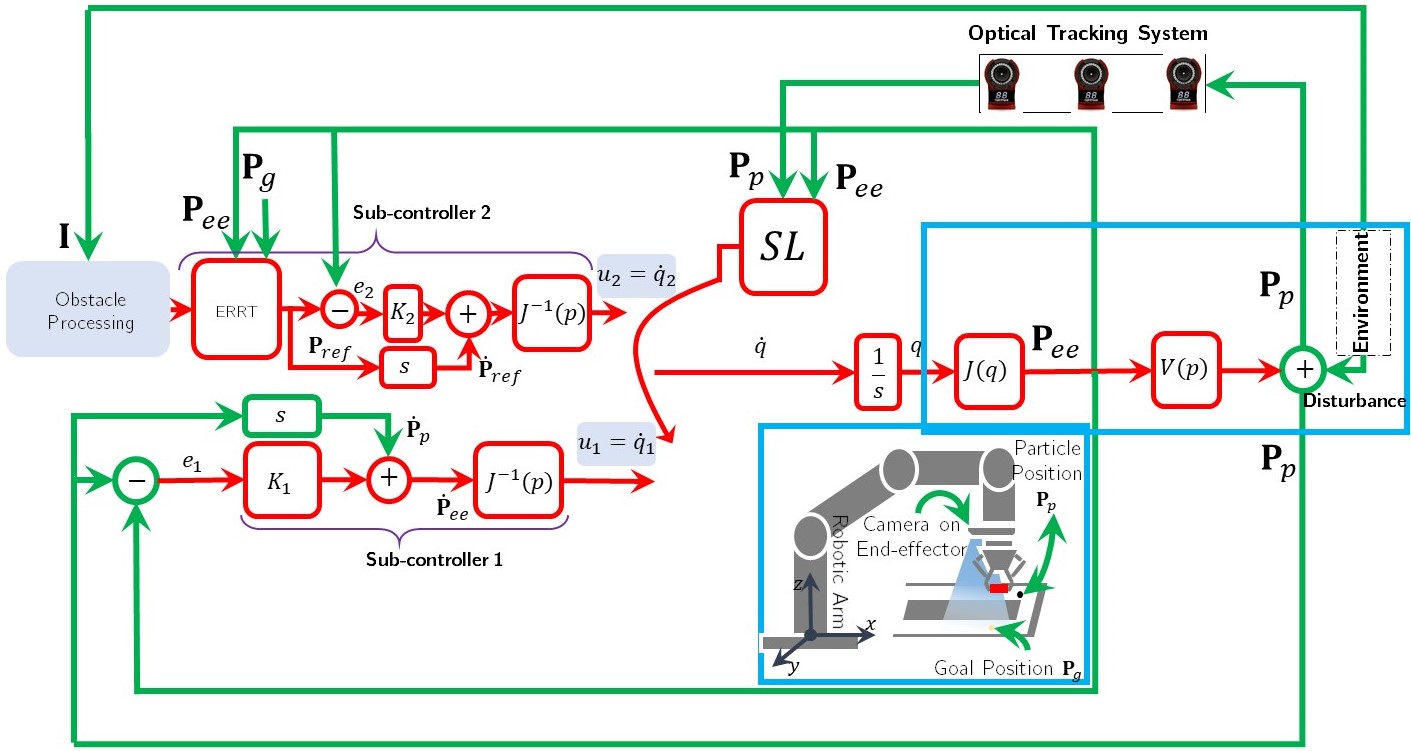

The controller switches between two sub-controllers. The first uses inverse kinematics to locate the ferromagnetic particle in the workspace while rejecting disturbances. Once the particle is found, the second sub-controller takes over: a customized Rainbow agent (Rainbow combines several extensions of the deep Q-network, DQN) trained to guide the particle past obstacles to a target position.
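The hand-over between the two sub-controllers can be pictured as a small state machine. The sketch below is a minimal illustration under stated assumptions, not the project's code: `SwitchingController`, `localize`, `policy`, and `particle_found` are hypothetical names, actions are assumed to be end-effector displacements, and falling back to localization when tracking is lost is an assumption (the description only says the second sub-controller takes over once the particle is found).

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Callable, Tuple

# Hypothetical action type: a commanded end-effector displacement (dx, dy, dz).
Action = Tuple[float, float, float]


class Mode(Enum):
    LOCALIZE = auto()  # sub-controller 1: inverse-kinematics search for the particle
    NAVIGATE = auto()  # sub-controller 2: trained RL policy steers toward the target


@dataclass
class SwitchingController:
    # Hypothetical stand-ins for the project's components.
    localize: Callable[[dict], Action]      # one step of the IK-based search
    policy: Callable[[dict], Action]        # greedy action from the trained agent
    particle_found: Callable[[dict], bool]  # e.g. a detector reporting the particle
    mode: Mode = Mode.LOCALIZE

    def step(self, obs: dict) -> Action:
        found = self.particle_found(obs)
        if self.mode is Mode.LOCALIZE and found:
            self.mode = Mode.NAVIGATE  # hand over once the particle is located
        elif self.mode is Mode.NAVIGATE and not found:
            self.mode = Mode.LOCALIZE  # assumption: re-localize if tracking is lost
        if self.mode is Mode.LOCALIZE:
            return self.localize(obs)
        return self.policy(obs)
```

In use, `step` is called once per control cycle with the latest observation. With `particle_found=lambda obs: obs["visible"]`, for example, the controller returns the search action until `visible` becomes true and the policy's action afterwards.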

Training was done entirely in simulation using a virtual UR5 arm, after which the controller was transferred to the real hardware. The system achieved a 98.86% success rate over 30 episodes with randomly generated trajectories.

To benchmark the RL approach, I compared it against two classical methods: Attractor Dynamics and Execution-Extended Rapidly-Exploring Random Trees (ERRT). The RL controller matched or exceeded the performance of both and was more robust to disturbances.
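For context on the first baseline, a common form of attractor dynamics (the dynamical-systems approach to navigation) steers the heading angle phi with an attractor at the target direction and a repeller at each obstacle direction, with repulsion that fades with obstacle distance. The sketch below assumes that heading-direction formulation; it is not necessarily how this project's baseline was built, and the gains `lam_tar`, `beta1`, `beta2`, `sigma`, the speed, and the time step are illustrative values rather than tuned parameters.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def wrap(angle: float) -> float:
    """Wrap an angle to [-pi, pi)."""
    return (angle + math.pi) % (2 * math.pi) - math.pi


def heading_rate(phi: float, pos: Point, target: Point, obstacles: List[Point],
                 lam_tar: float = 1.0, beta1: float = 4.0, beta2: float = 0.02,
                 sigma: float = 0.4) -> float:
    """d(phi)/dt: stable fixed point at the target direction, unstable ones at obstacles.
    All gains are illustrative assumptions."""
    psi_tar = math.atan2(target[1] - pos[1], target[0] - pos[0])
    dphi = -lam_tar * math.sin(phi - psi_tar)  # attractor toward the target
    for ox, oy in obstacles:
        psi_obs = math.atan2(oy - pos[1], ox - pos[0])
        dist = math.hypot(ox - pos[0], oy - pos[1])
        lam_obs = beta1 * math.exp(-dist / beta2)  # repulsion strength decays with distance
        diff = wrap(phi - psi_obs)
        dphi += lam_obs * diff * math.exp(-diff ** 2 / (2 * sigma ** 2))  # repeller
    return dphi


def step(pos: Point, phi: float, target: Point, obstacles: List[Point],
         speed: float = 0.005, dt: float = 0.1) -> Tuple[Point, float]:
    """Euler-integrate the heading, then move the particle along the new heading."""
    phi = wrap(phi + dt * heading_rate(phi, pos, target, obstacles))
    new_pos = (pos[0] + speed * dt * math.cos(phi), pos[1] + speed * dt * math.sin(phi))
    return new_pos, phi
```

Because the obstacle terms are summed into the same heading equation as the target term, the method reacts to obstacles locally at every step instead of planning a full path in advance.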

This is the first demonstration of magnetic control of milliscale ferromagnetic particles using an RL algorithm that both avoids obstacles and rejects disturbances. The ERRT method required precise environmental calibration and struggled with dynamic obstacles, whereas the RL approach handled these cases without modification.
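For readers unfamiliar with ERRT, its core idea is an RRT whose growth is biased toward the goal and toward a cache of waypoints from the previous plan, which speeds up replanning. The sketch below is a minimal illustration of that idea, not the project's baseline: `choose_target`, `plan`, the probabilities `p_goal` and `p_waypoint`, the workspace `bounds`, and the circular obstacles of known center and `radius` are all hypothetical. Giving the planner obstacle positions up front also shows why a sampling planner depends on an accurate model of the environment.

```python
import math
import random
from typing import List, Optional, Tuple

Point = Tuple[float, float]


def choose_target(goal: Point, waypoint_cache: List[Point], rng: random.Random,
                  p_goal: float = 0.1, p_waypoint: float = 0.6,
                  bounds: Tuple[float, float, float, float] = (0.0, 0.1, 0.0, 0.1)) -> Point:
    """Pick the point to grow toward: the goal, a cached waypoint, or a uniform sample."""
    r = rng.random()
    if r < p_goal:
        return goal
    if r < p_goal + p_waypoint and waypoint_cache:
        return rng.choice(waypoint_cache)
    xmin, xmax, ymin, ymax = bounds
    return (rng.uniform(xmin, xmax), rng.uniform(ymin, ymax))


def plan(start: Point, goal: Point, obstacles: List[Point], waypoint_cache: List[Point],
         rng: random.Random, step: float = 0.005, radius: float = 0.01,
         max_iter: int = 5000) -> Optional[List[Point]]:
    """Grow a tree from start; obstacles are circles of `radius` around known centers."""
    tree: List[Point] = [start]
    parent: List[int] = [-1]
    for _ in range(max_iter):
        target = choose_target(goal, waypoint_cache, rng)
        i = min(range(len(tree)), key=lambda k: math.dist(tree[k], target))
        near = tree[i]
        d = math.dist(near, target)
        if d == 0.0:
            continue
        if d <= step:
            new = target  # reach the sample exactly when it is within one step
        else:
            new = (near[0] + step * (target[0] - near[0]) / d,
                   near[1] + step * (target[1] - near[1]) / d)
        # Simplification: only the new node is collision-checked, not the whole edge.
        if any(math.dist(new, c) < radius for c in obstacles):
            continue
        tree.append(new)
        parent.append(i)
        if math.dist(new, goal) < step:
            path: List[Point] = []
            j = len(tree) - 1
            while j != -1:  # walk parent links back to the start
                path.append(tree[j])
                j = parent[j]
            path.reverse()
            if path[-1] != goal:
                path.append(goal)  # final short hop to the exact goal
            return path
    return None
```

On the next control cycle, the returned path can be passed back in as `waypoint_cache`, so the new tree tends to regrow along the previous route unless an obstacle has moved into it.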